This is the short story: got a 'new' computer a few years ago. Turned it on, installed Linux, made all the updates, ready to go.

Monsoon storm zapped the ethernet on the motherboard. Couldn't get a USB ethernet adapter to work.

Few months later, tried another ethernet adapter and that worked. Finished all the updates and was ready to go. Oh, one last thing -- updated the operating system from Fedora 26 to Fedora 28. Did this, and then saw that the ethernet adapter wasn't working again. Threw in the towel -- put the machine in storage where it was for a couple years.

Got ANOTHER ethernet adapter. Pulled the machine out of storage and tried it. It worked right away. Updated python, got all the astronomical python libraries installed, etc, etc, etc. Upgraded from 2 to 8 GB of memory. Now I'm really rip-roaring ready to go!

Last weekend I planned on moving from my current 'work' computer to this 'new' one. Went to turn it on and ----- nothing. It won't turn on! Fans spin for about 1 second and then stop. From what I can tell, this means that something happened to the motherboard.

So now the machine is sitting on the floor giving me the finger (again!!) and I'm just staring at it.

Frustrating....

Independent Research Astronomer and Space Musician

Come with me and re-discover the universe!


Saturday, June 20, 2020

Wednesday, June 17, 2020

Fire in the Catalinas (61" Kuiper, Catalina Sky Survey, etc)

Another big fire in the Catalina Mountains north of Tucson. This mountain range is home to many observatories, including the 61" scope on Mt Bigelow that I used for my white dwarf photometry work back in the early '90s, and the Catalina Sky Survey on Mt Lemmon, which is hunting for near-Earth and Earth-crossing asteroids. There isn't a lot of vegetation at the CSS site, so if they're vigilant it'll be OK as far as fire protection goes. The 61" (there's also a 16" Schmidt camera there), though, is surrounded by trees.

In both cases, the SMOKE is gonna be a big problem.

Take care of yourselves up there!

Thursday, June 11, 2020

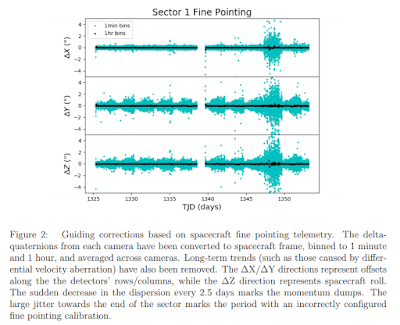

RTFM -- TESS Sector 1 Manual!

All I have to do is RTFM and many (maybe all???) of my TESS questions are answered!

https://archive.stsci.edu/missions/tess/doc/tess_drn/tess_sector_01_drn01_v02.pdf

Citizen Science rant that I almost posted to the SETI youtube channel ...

... but changed my mind and posted it here instead where it's guaranteed to never be seen by anyone except YOU!

Here it is:

The term 'Citizen Science' as it's currently being used is very deceptive. The person you interviewed is nothing more than a 'Data Collector'. Don't get me wrong! Data collection is VERY important, and it's very exciting and technical, but he's not doing science! People like him are fooled into thinking they're actually doing science because YOU call it 'Citizen Science' to make what they are ACTUALLY doing sound a little more appealing. All they're doing is participating in the scientific process, part of which is the tedious process of data collection. Tedious AND expensive! So you fool these people into willingly and happily collecting the data for you -- for free!!! Tom Sawyer did the same thing and got away with it, just like you are. Brilliant.

Are any of these 'Citizen Scientists' co-authors or authors on any papers, or are they maybe just mentioned in the acknowledgements? If the former, then yes they are 'Citizen Scientists'. But if the latter, they are 'Data Collectors'.

Wednesday, June 10, 2020

Steady Stars

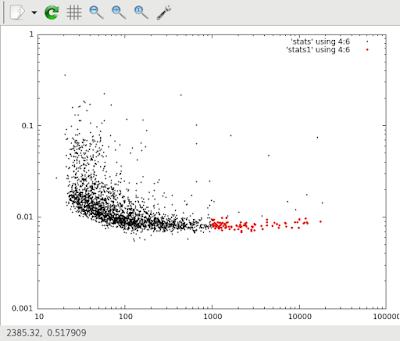

Here are stars in the Sector 1 field of view. Note that both axes in this plot are on a logarithmic scale. The x-axis is the brightness (larger numbers, brighter star). The y-axis is the 1-sigma standard deviation as a percentage of brightness (smaller number, more precise or more steady).

Here are the stars (marked in red) that have a brightness of more than 1000 and a percent deviation of less than 1%:
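The red selection boils down to two cuts on each star's light curve: mean brightness above 1000, and a 1-sigma scatter below 1% of that mean. Here's a minimal Python sketch of those cuts. It assumes the light curves are held in a dict keyed by a hypothetical star id. The function name `steady_stars` and the data layout are illustrative, not the original script:

```python
from statistics import fmean, pstdev


def steady_stars(light_curves, min_brightness=1000.0, max_pct_dev=1.0):
    """Return (star_id, mean, pct_dev) for stars passing both cuts.

    light_curves: dict mapping a (hypothetical) star id to a list of
    background-subtracted brightness values, one per image.
    """
    selected = []
    for star_id, flux in light_curves.items():
        mean = fmean(flux)
        if mean <= min_brightness:
            continue  # too faint (this also skips zero or negative means)
        # 1-sigma standard deviation as a percentage of the mean brightness
        pct_dev = 100.0 * pstdev(flux) / mean
        if pct_dev < max_pct_dev:
            selected.append((star_id, mean, pct_dev))
    return selected
```

Plotting `mean` against `pct_dev` for every star on log-log axes gives the kind of diagram shown above, and the stars this function returns are the ones that would be colored red.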

Here's a plot (on a linear scale) showing a sample of these stars -- the brightest ones (8 stars in this sample of about 2400 have values greater than 10000):

Very interesting that even these aren't so steady.

Not sure what's going on with the data from data points 1000-1100. Also not sure why there's a discontinuity in all the data about mid-way through. There may be other systematic things that I need to understand and make sure I take into account.

I need to look at some fainter stars that are also steady to see how those light curves look.

Monday, June 8, 2020

TESS Overload

I just ran a script that took my machine 21 hours to complete! It measured the brightness of about 2200 stars (identified by the IRAF task 'starfind') in a 1000x1000 pixel area (translating to 6.3 by 6.3 degrees in the sky) of Sector 1, Camera 1, CCD 1. I did this for the entire data acquisition period, which went from day 206 to day 234 of 2018 (29 days!!).

I measured the brightness by computing the mean and mode of a small sample box centered on the star. The mean value is the brightness of the star plus the background. I noticed quite a while ago that the background does change. I need to investigate why, but for now I'm just accepting it as something that happens. The background in my small box is estimated by computing the mode -- the most common value. The star takes up 3 or 4 of the 49 pixels I'm sampling. The other pixels are part of the background and will be the most common value. To say it in stat verbiage: the distribution of brightness in the box is very non-Gaussian.

So by subtracting the mode from the mean, I get a rough measurement of the brightness of the star with no varying background.
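The mean-minus-mode trick takes only a few lines of Python. This is a minimal sketch that assumes the image is a 2-D list indexed `image[row][col]` and uses a 7x7 box (49 pixels, as above). Real pixel values aren't exact integers, so the mode is estimated by binning values with a hypothetical `bin_width` parameter:

```python
from collections import Counter
from statistics import fmean


def box_brightness(image, x, y, half=3, bin_width=1.0):
    """Rough star brightness: mean minus mode of a box centered on (x, y).

    image is a 2-D list indexed image[row][col]; half=3 gives a 7x7 box
    (49 pixels). bin_width is a hypothetical knob for estimating the mode
    of non-integer pixel values.
    """
    box = [image[r][c]
           for r in range(y - half, y + half + 1)
           for c in range(x - half, x + half + 1)]
    mean = fmean(box)  # star + background
    # Mode = most common (binned) value; with the star covering only a few
    # pixels, this is the local background level.
    counts = Counter(round(v / bin_width) for v in box)
    mode = counts.most_common(1)[0][0] * bin_width
    return mean - mode  # average per-pixel excess due to the star
```

With a flat background, mean minus mode equals the star's total excess counts divided by 49. Multiplying by the box size therefore gives an approximate total flux. For comparing a star with itself over time, the two are equivalent.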

What I see is astonishing but totally expected! I made some plots. As I said, there are over 2000 stars, so there are 2000+ plots! I put five plots on a single image, so that's only a little over 400 images. Here are some examples to show the diversity. The x-axis is image number, which tracks time: every point is 30 minutes after the previous one. The y-axis is auto-scaled by gnuplot to the min and max of each star's data.

Image caption: 2nd from the top is an eclipsing binary.

Image caption: 4th from the top is a very complex pattern.

Image caption: Top one is low amplitude, high frequency. 4th from the top is complex again.

And actually I'm interested in all of this for something completely out of left field -- something I think is very important that no one is talking about.

Which stars vary the least, or are closest to constant? Do the well-known standard stars used in photometric measurements show any variation on these timescales? I'm gonna see if there are any Landolt standards in this CCD's field of view.

Looking at all of these plots, there are only a handful that are totally flat (with noise, of course). Are 'constant' stars rare? What conditions make stars 'constant'?
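Here's one possible way to put a number on 'totally flat (with noise, of course)'. It's a swapped-in idea, not something from the original analysis: compare a light curve's overall scatter with its point-to-point scatter. For pure white noise the two agree (ratio near 1), because successive differences have an rms of sqrt(2) times the noise. Real variability slower than the 30-minute cadence inflates the overall scatter. This is closely related to the von Neumann ratio. A minimal Python sketch:

```python
from statistics import fmean, pstdev


def flatness_ratio(flux):
    """Overall scatter divided by point-to-point scatter for one light curve.

    Near 1 for white noise; well above 1 when the star (or the instrument)
    varies on timescales longer than the sampling interval.
    """
    diffs = [b - a for a, b in zip(flux, flux[1:])]
    # For white noise with standard deviation sigma, successive differences
    # have an rms of sqrt(2) * sigma, so this estimates sigma.
    point_to_point = (fmean([d * d for d in diffs]) / 2.0) ** 0.5
    if point_to_point == 0.0:
        raise ValueError("light curve has no point-to-point scatter")
    return pstdev(flux) / point_to_point
```

Any cutoff for 'flat' (say, a ratio below about 1.2) is a hypothetical choice that would need checking against the data. Instrumental steps, like a mid-sector discontinuity, would also raise the ratio, so systematics have to be removed first.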

All kinds of interesting questions -- and no one seems to be talking about it.

Anyhow I'm very pleased with this simple and very interesting experiment! Except for the search for the most constant star, I have no idea where this will lead.

Sunday, May 10, 2020

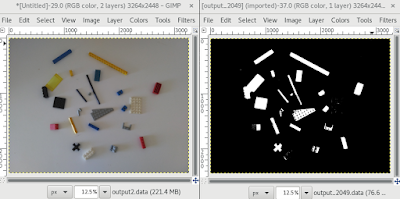

Different Lighting for Lego image

Tried the flash on my camera instead of room lighting. Here are the results. Input is a 1200x1200 pixel image.

Input:

Output (centroids marked with white dots):

Here's that big blue rectangle on the right, 'extracted' from the image and put into its own image, along with its RGB histograms:

As you can see from the histogram, all of the blue values are larger than red and green, and therefore the color is 'blue'. Also notice that the number of pixels is an indication of size.
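The 'blue values are larger than red and green' test and the pixel-count-as-size idea can be sketched in Python. This is a minimal sketch that assumes an object arrives as a list of (r, g, b) pixel tuples. Instead of reading the histogram by eye, each pixel votes for its brightest channel. The mean color (the same kind of average color plotted for found objects) comes along for free. Names are hypothetical:

```python
from collections import Counter

CHANNELS = ("red", "green", "blue")


def describe_object(pixels):
    """Rough color, size, and mean color of one extracted object.

    pixels: list of (r, g, b) tuples (0-255) belonging to the object.
    """
    n = len(pixels)  # pixel count doubles as a size measure
    mean_rgb = tuple(sum(p[i] for p in pixels) / n for i in range(3))
    # Each pixel votes for its brightest channel (ties go to the earlier one)
    votes = Counter(CHANNELS[max(range(3), key=lambda i: p[i])] for p in pixels)
    color, count = votes.most_common(1)[0]
    return {"color": color, "agreement": count / n, "size": n, "mean_rgb": mean_rgb}
```

An `agreement` well below 1 would flag objects where the single-channel rule breaks down, such as yellow or white pieces.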

So with one histogram, I can know the approximate color and size. How well this translates to picking out a particular object or color is TBD.

... and now I'm finding the 'corners' of each found object. With these I can compute various parameters like the length of each side, the area, and the orientation / rotation with respect to vertical or horizontal. Small green dots mark the 'corners' and purple dots are the centroids.
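Given four corners in order around the outline, the side lengths, area, and rotation follow directly. Here's a minimal Python sketch. It assumes the corners are supplied in order (clockwise or counter-clockwise). The area uses the shoelace formula and the rotation is taken from the longest side. Both are illustrative choices, not necessarily what the original code does:

```python
import math


def quad_geometry(corners):
    """Side lengths, area, and rotation of an object from its four corners.

    corners: four (x, y) points listed in order around the outline.
    Angles are in degrees, measured in the image's own pixel axes and
    folded into [0, 180).
    """
    corners = list(corners)
    nxt = corners[1:] + corners[:1]  # each corner paired with the next one
    sides = [math.dist(p, q) for p, q in zip(corners, nxt)]
    # Shoelace formula for the area of a simple polygon
    area = 0.5 * abs(sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(corners, nxt)))
    # Rotation: direction of the longest side relative to the x-axis
    i = max(range(4), key=lambda k: sides[k])
    (x1, y1), (x2, y2) = corners[i], nxt[i]
    angle = math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0
    return sides, area, angle
```

For a 4x2 rectangle aligned with the axes, this gives sides of 4, 2, 4, 2, an area of 8, and an angle of 0.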

Best yet! 47 total objects. It got everything but the white pieces. Pretty decent color averages, too.

and the input again for comparison:

Each object is marked with four small green dots, showing its corners. 47 total objects were found, indexed, and stored, ready to be used as input to the next stage. There are actually a total of 48 pieces. The software missed two white pieces but also has one false positive (near the glary part, lower center): 47 found + 2 missed - 1 false positive = 48. Correct!!!

Saturday, May 9, 2020

Lego Project Update

I've almost got object finding completed. There's a bug in the algorithm somewhere that is making some interesting results.

I start with this image as input:

The individual objects that the software finds are color-coded. So all the red pixels above are part of one 'object'. Same with green and purple. Notice, however, that there's some strange stuff going on.

One part of the definition of an 'object' is that the pixels have to all be connected. The software counted three objects in the input image. I'm sure you notice that there's some funny stuff going on with the red and green pixels on the right. The reds, for instance, are all part of the same object -- but that stuff on the right clearly isn't connected to the red pixels on the left! Not sure what's going on here. I'm using a recursive algorithm to find those pixels that are part of an 'object', but clearly there's something going wrong. It's hard to see, but there are some green pixels around the purple object, too.
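For reference, here's one standard way to label connected 'objects' in a thresholded image. It's a sketch, not the fixed version of the original code. It swaps the recursive search for an iterative breadth-first flood fill, because a recursive fill in CPython can exceed the default recursion limit (1000) on a large object. The key invariant: a pixel is added to an object only when it's in the mask, touches a pixel already in that object, and hasn't been labeled yet. That way every object is connected by construction. The 4-connectivity and the boolean mask format are assumptions:

```python
from collections import deque


def label_objects(mask):
    """Label 4-connected regions of True pixels in a 2-D boolean mask.

    Returns (labels, count); labels[r][c] is 0 for background,
    otherwise an object number from 1 to count.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or labels[r0][c0]:
                continue  # background, or already part of an object
            count += 1  # start a new object at this seed pixel
            labels[r0][c0] = count
            queue = deque([(r0, c0)])
            while queue:
                r, c = queue.popleft()
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not labels[nr][nc]):
                        labels[nr][nc] = count
                        queue.append((nr, nc))
    return labels, count
```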

Once I get the object locator code working, I can turn to object identification. The first thing I'll try to do is identify color. I'll try to do it by brute force at first (conditional statements in the code like if red and green are nearly equal, then the color is probably yellow), but I'm thinking that this'll be my first opportunity to use a neural network for the color identification.
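The brute-force color rules might look something like this. It's a minimal sketch: the tolerance `tol` and the brightness cutoffs for white, gray, and black are hypothetical values that would need tuning against real photos:

```python
def classify_color(r, g, b, tol=30):
    """Very rough color name from an average (r, g, b), each 0-255."""
    spread = max(r, g, b) - min(r, g, b)
    if spread < tol:
        # Channels nearly equal: a neutral color, named by brightness
        level = (r + g + b) / 3
        if level > 200:
            return "white"
        if level < 60:
            return "black"
        return "gray"
    if abs(r - g) < tol and min(r, g) > b + tol:
        # Red and green nearly equal and both well above blue
        return "yellow"
    names = ("red", "green", "blue")
    return names[max(range(3), key=lambda i: (r, g, b)[i])]
```

Rules like these quickly get tangled for colors such as orange, tan, or dark blue. That's where a trained classifier starts to look attractive.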

UPDATE

LOL after writing the above, I realized what I'd done wrong in the code. I fixed that, with these results:

So here are three found objects. The little black dots show the center of each one, proving that the code is finding individual objects.

I've jumped forward to writing out centered found objects to individual images. So I start with this different image:

... and end up with these four extracted images showing four found objects. These images can be used as input to a trained neural net or whatever:
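Writing a found object out to its own centered image comes down to two steps. First, compute the centroid (the mean row and column of the object's pixels). Then copy its pixels into a fixed-size canvas around that point. This is a minimal sketch that assumes a label map marking each object's pixels with a number. `size` and `fill` are hypothetical parameters:

```python
def extract_object(image, labels, label, size=64, fill=(255, 255, 255)):
    """Copy one labeled object into its own size x size image.

    image:  2-D list of (r, g, b) tuples.
    labels: 2-D list of the same shape; pixels of this object equal `label`.
    The object is placed so its centroid lands at the middle of the output;
    every other output pixel is set to `fill`.
    """
    coords = [(r, c) for r, row in enumerate(labels)
              for c, v in enumerate(row) if v == label]
    # Centroid = mean row and mean column of the object's pixels
    cr = round(sum(r for r, _ in coords) / len(coords))
    cc = round(sum(c for _, c in coords) / len(coords))
    half = size // 2
    out = [[fill] * size for _ in range(size)]
    for r, c in coords:
        orow, ocol = r - cr + half, c - cc + half
        if 0 <= orow < size and 0 <= ocol < size:  # clip very large objects
            out[orow][ocol] = image[r][c]
    return (cr, cc), out
```

Filling the non-object pixels with a constant keeps neighboring pieces from leaking into the cutout, which matters if these images are fed to a neural net.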

Object 1

Object 2

Object 3

Object 4

Kinda rough, but not too bad for a first try.

As you can see, it didn't pick up the white piece next to the red. That's mainly because I'm picking out these objects by measuring their contrast to the background. A white Lego on a white background makes it hard to pick out. I'll either need to use a different background (a non-Lego color), or change how I find the individual objects.
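A contrast test against the background color shows why white pieces disappear on a white background. This is a minimal sketch. It estimates the background as the per-channel median color, which is reasonable when the background covers most of the frame. It then applies a hypothetical `threshold` to the largest per-channel difference:

```python
from statistics import median


def foreground_mask(image, background=None, threshold=40):
    """True where a pixel's color differs enough from the background.

    image: 2-D list of (r, g, b) tuples. If background is None it is
    estimated as the per-channel median, which assumes the background
    covers most of the frame. threshold is a hypothetical value.
    """
    if background is None:
        background = tuple(median(p[i] for row in image for p in row) for i in range(3))
    br, bg, bb = background
    return [[max(abs(r - br), abs(g - bg), abs(b - bb)) > threshold
             for (r, g, b) in row]
            for row in image]
```

A near-white piece differs from a white background by only a few counts per channel. It therefore falls under any threshold large enough to ignore shading and noise. A background color that no Lego piece uses avoids the problem.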

So the next step is color identification. As I said, I'm gonna try hard-coding with RGB thresholding to see if I can measure colors that way. If not, I'll turn to a neural net solution.

The step after that is object identification. This seems to be where everyone gets stuck. I have no idea if I will, so I'm proceeding.

MORE Update

Ahhhh! I can't stop! Here's an image with a few more objects. The code found 8 objects, of which I'd say five are usable for matching. It's also 1000x1000 pixels -- the largest array so far.

probably not usable

probably not usable

probably not usable

More more update:

I've taken a new image of some close-packed Legos to see how many of them my software can find. This is, again, a 1000x1000 array. It found 41 objects in this image! Not too bad!

Input Image

Found Objects

LOL looks like it missed all the gray pieces, again because their color is so close to the background. Each object is marked with a red dot. Notice all the spaces?

Average colors (dot) of found objects:

Wow, this next image is impressive. Here's each found object with its average color. Compare this to the input image and the similarity is pretty striking!

Friday, May 8, 2020

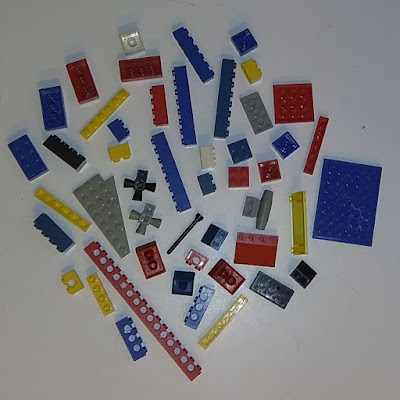

Lego Project

The very simple project I thought up a week or so ago was to build something that uses every single one of my Legos. This project has now exploded / expanded a little bit. My primary focus is to see how well I can create an automatic Lego piece sorting machine. This is the first step:

Thinking Lego. Writing code this weekend to number (tag) the objects seen in this image. I probably won't get it done. Identifying and tagging pieces, and then classifying and sorting them according to various rules, will come later. I plan to use 'Deep Reinforcement Learning', which combines reinforcement learning (learning by maximizing a reward signal) with multi-layer ('deep') neural networks.

Why are there no Lego automatic sorting machines that actually work and will sort in many different ways?????

There are quite a few ways to sort Legos. I need to come up with at least a few of them so that when I'm ready to start working on that part, I will have thought about it a little beforehand.

One of the interesting tangents is looking at the color information in an interesting way. Each channel: red, green, and blue, can have a value between 0 and 255 for each pixel. So I can create a histogram of the distribution of red, green, and blue of any image. The plots below use the left image above as input. I can also look at the distribution of red+green (yellow), red+blue (violet), and green+blue (cyan). I can plot these distributions with the y-axis being a logarithmic scale, and I get this:
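The histograms themselves are just counts. The post doesn't say exactly how the red+green, red+blue, and green+blue distributions were formed. This minimal sketch assumes a per-pixel sum of the two channels, which ranges from 0 to 510. For the logarithmic y-axis, empty bins are dropped because log(0) is undefined:

```python
import math


def channel_histograms(pixels):
    """Counts for R, G, B (256 bins) and pairwise sums R+G, R+B, G+B (511 bins).

    pixels: iterable of (r, g, b) tuples with integer values 0-255.
    """
    hists = {name: [0] * 256 for name in ("red", "green", "blue")}
    hists.update({name: [0] * 511 for name in ("red+green", "red+blue", "green+blue")})
    for r, g, b in pixels:
        hists["red"][r] += 1
        hists["green"][g] += 1
        hists["blue"][b] += 1
        hists["red+green"][r + g] += 1
        hists["red+blue"][r + b] += 1
        hists["green+blue"][g + b] += 1
    return hists


def log_counts(hist):
    """log10 of each bin for a logarithmic y-axis; empty bins become None."""
    return [math.log10(n) if n > 0 else None for n in hist]
```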

But that's just a nice tangent, which might be helpful for training the neural net.

Thursday, April 16, 2020

Starting Soon

I should also mention that this observing program begins on Monday, 20 April 2020. That's pretty much the first day that Jupiter and Saturn are sufficiently high in the sky (about 20 degrees elevation and rising) to get some decent data. Saturn will still be a little low at first, but it's right there so why not?

I also made a list of eclipse events for HW Vir, which is currently my favorite EB system. Here they are for the next week or so that I'll be able to see between 10h and 11h UTC:

S 20 Apr 2020 10:52

P 21 Apr 2020 10:41

S 22 Apr 2020 10:29

P 23 Apr 2020 10:18

S 24 Apr 2020 10:07

S 27 Apr 2020 10:57

where 'P' and 'S' are Primary eclipse and Secondary eclipse.
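A list like this can be generated from an ephemeris. Add multiples of half the orbital period to a reference primary eclipse, alternate P and S, and keep only the events that fall in the observing window. This minimal sketch rests on three assumptions. The reference epoch is the 21 Apr 10:41 primary from the list. The period of about 0.11672 days (roughly 2.8 hours) is an assumed value consistent with the spacing of the listed eclipses. Secondaries are taken to fall exactly at phase 0.5:

```python
import math
from datetime import datetime, timedelta

# Assumed ephemeris: a primary eclipse from the list above and an
# orbital period of about 0.11672 days.
EPOCH = datetime(2020, 4, 21, 10, 41)  # UTC
PERIOD = timedelta(days=0.11672)


def eclipse_events(start, end, first_hour=10, last_hour=11):
    """Return (kind, time) pairs for eclipses between start and end (UTC)
    whose hour of day lies in [first_hour, last_hour).

    Events are spaced half a period apart: even multiples of the half
    period are primaries ('P'), odd multiples secondaries ('S').
    """
    half = PERIOD / 2
    n = math.floor((start - EPOCH) / half)  # first half-cycle at or before start
    events = []
    t = EPOCH + n * half
    while t < end:
        if t >= start and first_hour <= t.hour < last_hour:
            events.append(("P" if n % 2 == 0 else "S", t))
        n += 1
        t = EPOCH + n * half
    return events


for kind, when in eclipse_events(datetime(2020, 4, 20), datetime(2020, 4, 28)):
    print(kind, when.strftime("%d %b %Y %H:%M:%S"))
```

With these assumed values, the computed times agree with the list above to within a minute. A real ephemeris from the literature would be needed to extend the predictions far into the future.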

I'm observing between 10 and 11h UTC because that's when Saturn and Jupiter are up. Iapetus will be in a perfect location (almost as far away from Saturn as it can be):

The reason I need Iapetus to be far away from Saturn is that 1- or 2-second exposures are required for this target. It's faint: visual magnitude 12. Doing that long of an exposure with Saturn in the FOV probably wouldn't hurt the CCD, but wow, Saturn would be incredibly overexposed. So none of this data will include Saturn, but it will hopefully contain a bright reference star most of the time. Iapetus is sufficiently far away from Saturn during most of its orbit to make this project possible.
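To put 'incredibly overexposed' in numbers, Pogson's magnitude relation gives the brightness ratio between two objects. In this minimal sketch, Saturn's magnitude (about +0.5) is an assumed round value, not a measured one:

```python
def flux_ratio(m_bright, m_faint):
    """How many times brighter the m_bright object is than the m_faint one.

    Pogson's relation: a difference of 5 magnitudes is a factor of 100.
    """
    return 10 ** (0.4 * (m_faint - m_bright))


# Saturn at an assumed magnitude of about +0.5 versus Iapetus at about 12
print(round(flux_ratio(0.5, 12.0)))
```

That works out to roughly 40,000 times brighter, so an exposure long enough to record Iapetus well would bury Saturn many times over.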

If I get other moons in the FOV, that'll be bonus.

Wednesday, April 15, 2020

Research Direction(s)

Iapetus: photometric light curve (brightness versus orbital phase, brightness versus time)

Galilean moons: photometric light curves (brightness versus orbital phase, brightness versus time)

Eclipsing binary star systems: topic TBD

For the moon photometry, the idea is to keep the data collection short and sweet. There's no particular reason to sit on the targets more than about 10 minutes. That'll allow me enough time to get a decent statistical sample while all the moons are at a single orbital phase. So observing Saturn and Jupiter will literally take about 30 minutes plus setup time.
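Here's a quick check on why 10 minutes per target is short enough to stay at 'a single orbital phase': compute the fraction of an orbit each moon covers in that time. The periods below are approximate published orbital periods, rounded:

```python
# Approximate orbital periods in days (rounded published values)
PERIODS_DAYS = {
    "Io": 1.769,
    "Europa": 3.551,
    "Ganymede": 7.155,
    "Callisto": 16.689,
    "Iapetus": 79.32,
}


def phase_drift(minutes, period_days):
    """Fraction of one orbit a moon covers in `minutes`."""
    return minutes / (period_days * 24 * 60)


for moon, period in PERIODS_DAYS.items():
    print(f"{moon:<9} {phase_drift(10, period):.5f}")
```

Even Io, the fastest, moves through only about 0.004 of its orbit in 10 minutes. Iapetus moves less than 0.0001.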

Along with that, I'll collect photometric data on a selection of eclipsing binary stars. I just got the ebook: "Eclipsing Binary Stars: Modeling and Analysis" by Josef Kallrath and Eugene F. Milone. It seems comprehensive enough to be able to give me a pretty decent idea about what direction I want to take and what data will have to be acquired either by myself or from various surveys.

The computer saga: Let me tell you a computer story. About two years ago I bought a fairly new computer to replace the one I've been using for a while as my 'research computer'. This is where I have all of my working data, along with all my software to do the data reduction and analysis. The new computer arrived, and within the first week the ethernet adapter (on the motherboard) got zapped by a lightning strike. So there was no way to have a wired ethernet connection. OK, so I switched to wifi with a little USB wifi dongle. I got the computer set up and configured, and installed all the Python stuff so I could start my switch from IRAF to the various astronomical Python packages that are now available.

One day the system asked if I wanted to upgrade the OS. I figured this was a good time to do that, so I went ahead with that. After the upgrade, no matter what I did I couldn't get the wifi to work! So now I have a pretty decent machine but no reasonable way to get data in and out of it!

I found another computer that had Windows 7 on it. I decided to replace its hard drive with the one from my new computer. This went well for a couple days until THIS computer decided that it didn't want to power up anymore. It just sat there and clicked!

Needless to say, I was pretty bummed out. So I put all these computers away and sort of forgot about them. Thinking about this new research and still wanting a newer computer to do the work, I started thinking about either getting yet another new computer, or figuring out how to cobble together something with the pieces I have.

I also had acquired a new wifi USB dongle.

This past weekend I pulled everything out. I put the original new machine back together (less one memory stick which I can't find, so I only have 2 GB of memory at the moment), plugged in the new wifi adapter and VOILA the machine came up and connected to my wifi! So yay now I have a working machine with some decent processing power. I'd like to get at least 8 GB of memory on this machine.
